WebSite Auditor does not find some of my pages

Normally, WebSite Auditor's default setup crawls a website in full and gathers all of its pages. In certain cases, though, you may need a bit of fine-tuning to collect all of the site's resources.

If you're struggling to collect all the pages of your website with WebSite Auditor, follow the steps below:

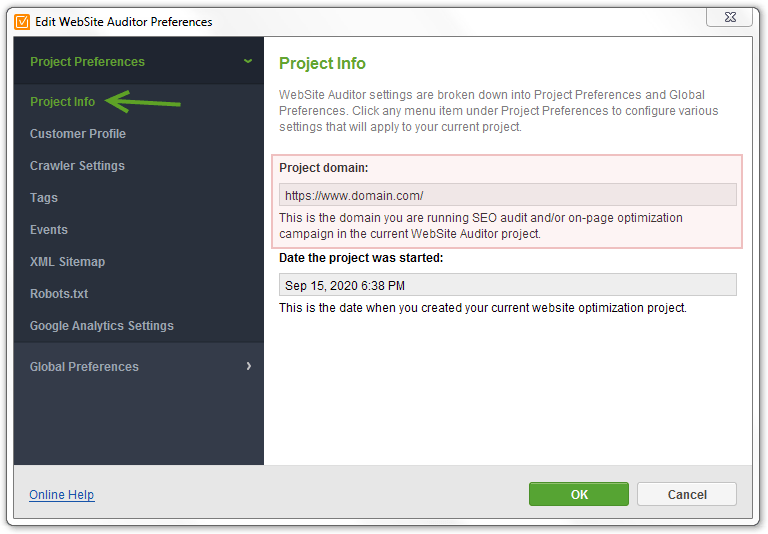

1. Make sure the Project URL is correct

- Check that you've used the correct prefix: it's best to create the project for the canonical version of your domain (http vs. https, www vs. non-www).

- Make sure you've created the project for the root domain, not for an exact page URL.

* Even if your actual homepage is, say, domain.com/index.php, create the project for domain.com. Otherwise, WebSite Auditor will treat only the pages whose URLs contain the full Project URL (domain.com/index.php/...) as the website's own pages.

Please note that the Project URL is not editable once the project is created. If you've used the wrong URL, simply create a new project for the proper one.
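To illustrate why the Project URL matters, here is a minimal Python sketch of a prefix-based scope rule. It assumes that a page counts as the site's "own" only when its scheme, host, and path prefix all match the Project URL; the function `is_own_page` is hypothetical and is not WebSite Auditor's actual code.

```python
from urllib.parse import urlsplit


def is_own_page(url: str, project_url: str) -> bool:
    """Hypothetical scope rule: same scheme, same host, path under the project path."""
    u, p = urlsplit(url), urlsplit(project_url)
    # http vs. https or www vs. non-www counts as a different site here.
    if (u.scheme, u.netloc) != (p.scheme, p.netloc):
        return False
    base = p.path.rstrip("/")  # "" for a root-domain project
    return u.path == base or u.path.startswith(base + "/")


print(is_own_page("https://domain.com/about", "https://domain.com"))            # True
print(is_own_page("https://domain.com/about", "https://domain.com/index.php"))  # False
print(is_own_page("http://domain.com/about", "https://domain.com"))             # False
```

Under this assumed rule, a project created for domain.com/index.php would skip domain.com/about entirely, and a project created for the non-canonical prefix would skip the canonical pages.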

2. Disable following Robots.txt Instructions

By default, WebSite Auditor follows robots.txt rules (the default SEO PowerSuite bot is a generic one).

If some pages or resources are restricted from indexing (with robots.txt, a noindex meta tag, or an X-Robots-Tag rule in the HTTP header), their URLs won't be crawled any further and will be listed under All Resources with the corresponding label in the Robots.txt Instructions column.

To collect all the pages regardless of their indexing rules, disable the Follow Robots.txt Instructions option in Expert options when creating or rebuilding the project.
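For a quick check of whether a given URL is blocked by robots.txt rules for a generic bot, Python's standard `urllib.robotparser` can be used as a rough approximation. This sketch assumes a generic crawler obeys the `User-agent: *` group; the robots.txt contents and the helper name `allowed` are hypothetical. It covers robots.txt only: noindex meta tags and X-Robots-Tag headers live in the page HTML and HTTP response and are not checked here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, used for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def allowed(url: str, robots_txt: str = ROBOTS_TXT, agent: str = "*") -> bool:
    """Return True if the robots.txt rules let `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from text, no network access
    return parser.can_fetch(agent, url)


print(allowed("https://domain.com/public/page"))   # True
print(allowed("https://domain.com/private/page"))  # False
```

URLs for which this returns False are the kind that a crawler following robots.txt would stop at, which is why disabling the option can surface more pages.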

3. Collect Orphan Pages

WebSite Auditor crawls a website starting from the homepage (the project domain URL) and following every internal link. If some pages are not linked from the rest of the website, they won't be found by crawling the site directly.

To find such 'orphans' and fix your internal linking, enable the Search for Orphan pages option under Expert options (search across Google's index, your sitemap, or both).

Orphans will have the corresponding Orphan and Source labels in the Tags column.

- Pages that link to each other but are not linked from the rest of the website are still orphans.

- Orphan tags are not removed automatically on Rebuild. If you've linked an orphan page to the site, make sure to delete the tag manually; otherwise, it will remain after a Rebuild.

- It's OK to have orphaned 404 pages from Google's index: after you delete a page and remove all internal links to it, it may take some time for Google to deindex it naturally.
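Conceptually, orphan detection compares two URL sets: everything reachable by following links from the homepage, and everything known from another source such as a sitemap or a search index. The minimal Python sketch below assumes the site's internal links are already available as a simple dict; the names `reachable_from` and `find_orphans` and the sample URLs are hypothetical, and this is not WebSite Auditor's implementation.

```python
from collections import deque


def reachable_from(home: str, links: dict[str, list[str]]) -> set[str]:
    """Breadth-first traversal of internal links starting at the homepage."""
    seen = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def find_orphans(home: str, links: dict[str, list[str]], known_urls: set[str]) -> set[str]:
    """Known URLs (e.g. from a sitemap) that the link crawl never reached."""
    return set(known_urls) - reachable_from(home, links)


# /x and /y link to each other, but nothing on the site links to either one.
links = {"/": ["/a", "/b"], "/a": ["/b"], "/x": ["/y"], "/y": ["/x"]}
sitemap = {"/", "/a", "/b", "/x", "/y"}
print(sorted(find_orphans("/", links, sitemap)))  # ['/x', '/y']
```

The example mirrors the note above: /x and /y link to each other, yet both are still orphans because the crawl from the homepage never reaches them.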

4. Reset the Scan Depth limit

Some pages may not be collected if you've set a Scan Depth limit: the app will only crawl and collect pages that are up to x clicks away from the homepage (Project URL).

You can check or reset the Scan Depth limit under Expert options.

5. Enable JavaScript

If your website is built with Ajax or features any JavaScript-generated content, make sure to enable the Execute JavaScript option so the app can access and crawl the content in full.

Make sure you set a timeout long enough for JavaScript execution, depending on your website's specifics.

6. Collect URLs with parameters

By default, WebSite Auditor gathers pages with certain (most commonly used) URL parameters, but there is also an Ignore All option. With it enabled, the app treats all pages that differ only in their URL parameters as the same page and collects only the 'naked' URL, without parameters.

Navigate to URL Parameters under Expert options and set the app to Ignore Custom URL Parameters (optionally, upload your site's custom parameter list directly from Google Search Console).

We don't recommend disabling the option altogether, though: on e-commerce websites, for instance, this may lead to endless crawling of cart and product pages with every combination of filters applied.
