What do I do if WebSite Auditor freezes/runs out of memory?

Like every SEO PowerSuite app, WebSite Auditor is built on Java and has to process large amounts of data, so it can be quite resource-demanding.

To avoid overloading an average system, our tools limit their RAM consumption to about 1-4 GB by default (depending on the OS and other factors), regardless of how much RAM your computer actually has.

As a result, if you're working on a large project, have many unneeded projects open, or are running several Java-based apps at once, the apps may eventually run out of memory and freeze or crash.

Fortunately, there are several ways to overcome this.

Extend the default memory limit

You can prevent the app from running out of memory by raising the default limit to match your system's actual capabilities.

For instance, on a computer with 4 GB of RAM, raising the memory limit to 3500 MB lets the software handle sites of up to 50K pages. The more RAM you have, the higher the value you can set.
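As a rough illustration, the 3500 MB / 50K pages figure works out to about 70 KB of memory per crawled page (3500 MB / 50,000 ≈ 0.07 MB). The Python sketch below assumes memory use grows linearly with the number of pages, which is a simplification: real consumption also depends on page size, the number of resources and links per page, and any other open projects. The function name `rough_page_capacity` is hypothetical.

```python
# Reference point from this article: a 3500 MB limit handles sites of up to ~50,000 pages.
REFERENCE_LIMIT_MB = 3_500
REFERENCE_PAGES = 50_000


def rough_page_capacity(limit_mb: int) -> int:
    """Estimate how many pages a given memory limit can handle.

    Assumption: memory use scales linearly with page count (~70 KB per page).
    This is a ballpark figure for illustration, not a guarantee.
    """
    # Integer arithmetic avoids floating-point rounding surprises.
    return limit_mb * REFERENCE_PAGES // REFERENCE_LIMIT_MB


if __name__ == "__main__":
    for limit in (2_048, 3_500, 7_000):
        print(f"{limit} MB -> about {rough_page_capacity(limit)} pages")
```

For example, `rough_page_capacity(2048)` gives about 29K pages, and doubling the limit to 7000 MB roughly doubles the estimate to 100K pages. Treat the result as a starting point rather than a precise limit.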

Limit the number of scanned URLs

Some older PCs have only 2 GB of RAM, in which case extending the default memory limit won't help. To let the app crawl a large website on such a machine, you'll need to set reasonable limits on the crawl itself using the options below:

When creating or rebuilding a project, enable Expert options:

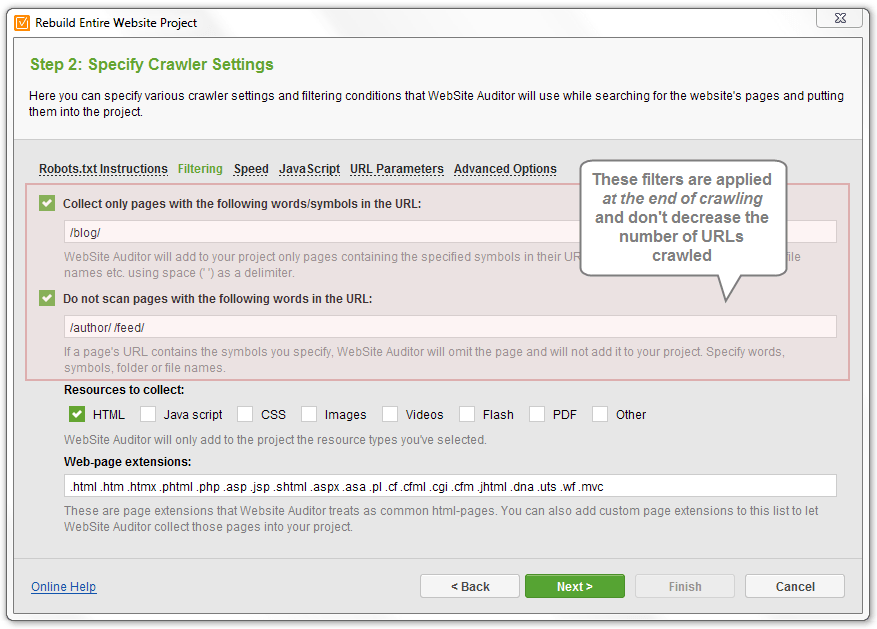

- In the Robots.txt Instructions section, limit the scan depth (scanning a site 2-3 clicks deep should collect its most important, core pages)

- In the Filtering section, exclude some resources from being collected into your project (keep only HTML, or exclude specific resource types such as scripts, images, videos, and PDFs)

- In the URL Parameters section, set the app to Ignore ALL Parameters to prevent it from collecting endlessly generated dynamic URLs
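To see why these three settings reduce the amount of data the app has to hold, here is a minimal Python sketch of a breadth-first crawler that applies the same kinds of limits: a maximum click depth, skipping non-HTML resources by file extension, and dropping URL parameters so that `/blog?page=2` and `/blog` count as one page. It is not how WebSite Auditor works internally; the link graph in `SITE`, the extension list, and all function names are hypothetical stand-ins.

```python
from collections import deque
from urllib.parse import urlsplit, urlunsplit

# Hypothetical link graph standing in for a real site: page URL -> links found on it.
SITE = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/logo.png"],
    "https://example.com/blog": ["https://example.com/blog?page=2", "https://example.com/blog/post-1"],
    "https://example.com/blog?page=2": ["https://example.com/blog?page=3"],
    "https://example.com/blog/post-1": ["https://example.com/blog/post-1/comments"],
}

# Assumed list of resource types to skip (like keeping only HTML in the Filtering section).
SKIPPED_EXTENSIONS = (".png", ".jpg", ".gif", ".js", ".css", ".pdf", ".mp4")


def normalize(url: str) -> str:
    """Drop the query string and fragment, similar to ignoring all URL parameters."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def is_wanted(url: str) -> bool:
    """Keep HTML pages only: skip URLs whose path ends in a resource extension."""
    return not urlsplit(url).path.lower().endswith(SKIPPED_EXTENSIONS)


def crawl(start: str, max_depth: int) -> set:
    """Breadth-first crawl that stops following links at max_depth clicks."""
    start = normalize(start)
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        url, depth = queue.popleft()
        if depth == max_depth:
            continue  # depth limit reached: don't follow this page's links
        for link in SITE.get(url, []):
            link = normalize(link)
            if is_wanted(link) and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return seen


if __name__ == "__main__":
    for url in sorted(crawl("https://example.com/", max_depth=2)):
        print(url)
```

With `max_depth=2`, only the home page, `/blog`, and `/blog/post-1` are collected: the image is skipped, the `?page=2` variant collapses into `/blog`, and `/blog/post-1/comments` (3 clicks deep) is never reached. Fewer collected URLs means less data to keep in memory.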

Please note that setting up URL Filters won't reduce memory consumption. All pages and resources are still crawled, because the ones that have to be excluded may contain links to pages that don't match the filter condition; the filtered URLs are only removed at the end of the crawling process.
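The following Python sketch illustrates this with a hypothetical four-page site in which a page excluded by a filter (`/tag/seo`) is the only route to a page you do want (`/guide`). Since a crawler can't know that in advance, it has to visit every reachable page and apply the filter afterwards, so in this sketch the number of pages held during the crawl is the same with or without the filter. The site structure and function name are assumptions for illustration only.

```python
# Hypothetical site: /guide is reachable only through /tag/seo,
# which a URL filter is set to exclude.
SITE = {
    "/": ["/tag/seo", "/about"],
    "/tag/seo": ["/guide"],
    "/about": [],
    "/guide": [],
}


def crawl_then_filter(start: str, excluded: str):
    """Crawl every reachable page, then drop URLs containing `excluded`."""
    visited, stack = set(), [start]
    while stack:
        url = stack.pop()
        if url in visited:
            continue
        visited.add(url)  # every reachable page is fetched and kept during the crawl...
        stack.extend(SITE.get(url, []))
    # ...and the filter is applied only once crawling has finished.
    kept = {u for u in visited if excluded not in u}
    return visited, kept


if __name__ == "__main__":
    visited, kept = crawl_then_filter("/", "/tag/")
    print(f"visited {len(visited)} pages, kept {len(kept)}: {sorted(kept)}")
```

Here `crawl_then_filter("/", "/tag/")` visits all 4 pages but keeps only 3. Skipping `/tag/seo` during the crawl would have saved memory, but `/guide` would never have been found.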
